In this post, we'll walk you through what we learned while setting up image-based classification with Google Vision and Google Vertex AI. We'll show the process and go over some of the related cost and performance considerations.

Iconfinder is just like many other tech companies: we're continuously evolving, improving the product while also optimizing internal processes. Over the years, we've always tried to do things manually before automating them. By solving a task by hand first, you learn which parts are the most time-consuming and which would be the easiest to automate.

As you might know, we have millions of icons uploaded by designers worldwide. All these icons (now also including illustrations and stickers) are submitted along with tags: the keywords we use to make them searchable in our search engine. Try it if you haven't already.

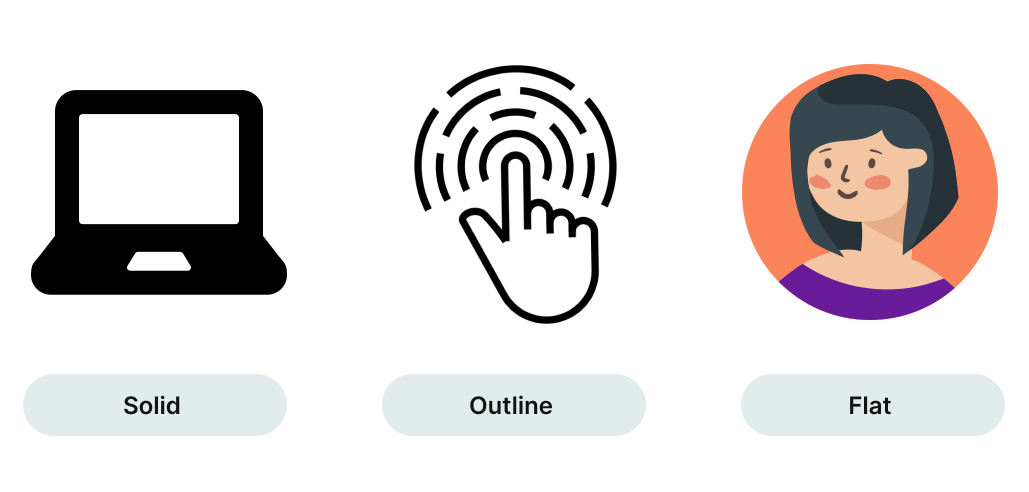

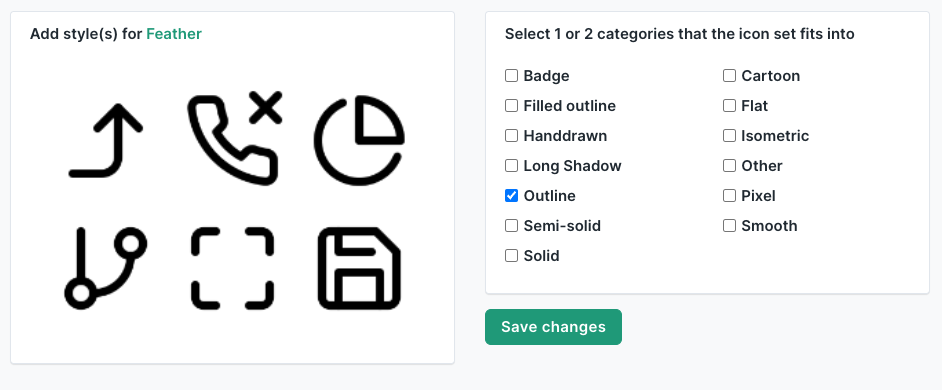

The designers themselves provide some of the data needed to make the content searchable: they group their icons into packs and add tags. Our team adds other metadata, such as categories, e.g., "Finance," "Animals," and "Transportation." Another dimension of metadata is the icon style. Icons can be assigned one (or in some cases two) of 13 styles, such as "Solid," "Outline," "Flat," and "Handdrawn."

We had a backlog of more than 80,000 icon packs, containing millions of icons, that were missing style metadata. With a simple UI for assigning styles, it would take an employee about three seconds to assign a style to a pack. For the whole backlog, that adds up to roughly two weeks of tedious work, and we still would not have a scalable solution.
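The "two weeks" figure is simple arithmetic. Here is a minimal sketch of it in Python; the pack count and seconds per pack come from the paragraph above, while the 40-hour work week is an assumption not stated in the text:

```python
# Back-of-the-envelope estimate of the manual style-tagging backlog.
# PACKS and SECONDS_PER_PACK come from the text; the 40-hour week is an assumption.
PACKS = 80_000
SECONDS_PER_PACK = 3
HOURS_PER_WORK_WEEK = 40


def manual_effort(packs: int, seconds_per_pack: float, hours_per_week: float) -> tuple[float, float]:
    """Return (total hours, work weeks) needed to assign a style to every pack by hand."""
    hours = packs * seconds_per_pack / 3600
    return hours, hours / hours_per_week


hours, weeks = manual_effort(PACKS, SECONDS_PER_PACK, HOURS_PER_WORK_WEEK)
print(f"{hours:.1f} hours, about {weeks:.1f} work weeks")
```

That comes out to roughly 67 hours, a bit under two full 40-hour weeks.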

In the past, we've solved problems such as fraud detection using machine learning, so when we couldn't keep up with adding styles and categories to incoming content manually, we decided to try solving it with machine learning as well.

In this post, we'll go through the steps we took and what we learned along the way. Hopefully, you'll find a few ways to save time on your next project. We started with a proof of concept using Google Vision and ended up using Vertex AI in Google Cloud.

Proof of concept using Google Vision

Before investing too much time in developing an ML-based solution, we needed to evaluate different options as well as determine the quality of our training data. Since we had some icon packs classified already, we had a starting point for the training data.

We considered a few third-party tools that would allow us to classify images. We played around with Clarifai, which comes with a nice UI, but based on their pricing model, we would quickly have ended up with an expensive setup.

We then looked at Google Vision, which seemed to have a better pricing model. It would also be possible to export a trained model and run it on our own server, e.g., on Heroku (which we currently use for web hosting and much more).

A third option was training our own model using PyTorch. Based on an initial experiment, we quickly realized that getting good results using PyTorch would be very time-consuming and not suitable for this project.

In the end, we decided that Google Vision would be the best option in terms of the quality of the results and price.

Training

The training itself was straightforward with Google Vision. We uploaded the image data to Google Cloud and generated a CSV file with the paths to the images and their labels. Then it was possible to train a new model using the Google Vision UI. It took a few hours to import around 80,000 images.
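Generating the import CSV is easy to script. A minimal sketch, assuming the single-label image classification import format of one `gs://bucket/path` followed by its label per row, with the optional leading ML_USE column left out so the service picks the train/validation/test split itself. The bucket name and object paths are hypothetical; check the format against the current Vertex AI documentation:

```python
import csv
import io
from typing import Iterable, TextIO

# Hypothetical bucket name; replace it with the bucket the images were uploaded to.
BUCKET = "gs://example-icon-training"


def write_import_csv(rows: Iterable[tuple[str, str]], out: TextIO) -> int:
    """Write one `gs://bucket/object,label` row per labeled image.

    `rows` yields (object_name, style_label) pairs, e.g. ("packs/123/icon-1.png", "Outline").
    The optional ML_USE column is omitted. Returns the number of rows written.
    """
    writer = csv.writer(out)
    count = 0
    for object_name, label in rows:
        writer.writerow([f"{BUCKET}/{object_name}", label])
        count += 1
    return count


if __name__ == "__main__":
    buf = io.StringIO()
    n = write_import_csv([("packs/1/a.png", "Outline"), ("packs/2/b.png", "Solid")], buf)
    print(n)
    print(buf.getvalue(), end="")
```

In practice, `out` would be a file opened with `open("labels.csv", "w", newline="")` rather than an in-memory buffer, and the rows would come from the packs that already had a style assigned.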

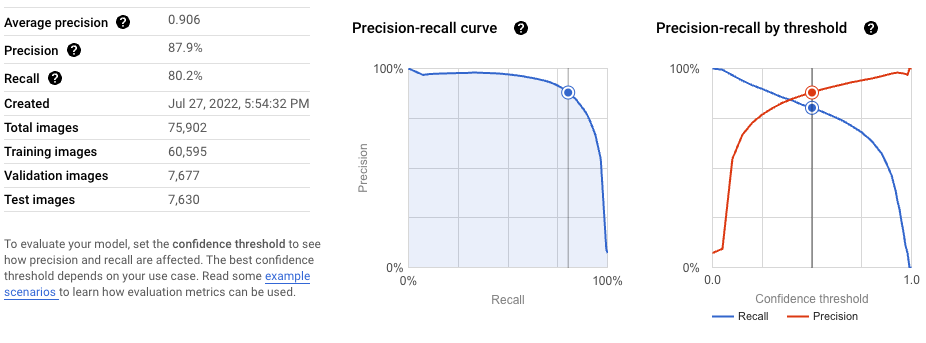

Evaluating results

This is a classic exercise in machine learning, and Google Vision provided some handy charts to get an idea of the model's performance quickly.

To evaluate the model, we looked at the confidence (a score from 0 to 1 given by the model), the recall (the percentage of all samples of a label that the model predicted correctly), and the precision (the percentage of the model's predictions for a label that were correct).

By setting a confidence threshold of 0.4, so only looking at results with a confidence of 0.4 or higher, we would get a recall and precision of around 84%. We wanted to favor precision at the cost of lower recall, so we also evaluated the results at higher confidence thresholds. For example, at a threshold of 0.7, we'd have 93% precision with 71% recall. Very promising!
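The trade-off between precision and recall as the threshold rises can be reproduced on your own evaluation data. The following is a minimal, micro-averaged sketch, not Google's exact computation: a prediction is accepted only if its confidence is at or above the threshold, precision is computed over accepted predictions, and recall over all samples. The toy data is made up for illustration:

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    true_label: str
    predicted_label: str
    confidence: float  # score between 0 and 1 returned by the model


def precision_recall_at(preds: list[Prediction], threshold: float) -> tuple[float, float]:
    """Micro-averaged precision and recall when only predictions with
    confidence >= threshold are accepted."""
    accepted = [p for p in preds if p.confidence >= threshold]
    correct = sum(p.predicted_label == p.true_label for p in accepted)
    precision = correct / len(accepted) if accepted else 0.0
    recall = correct / len(preds) if preds else 0.0
    return precision, recall


if __name__ == "__main__":
    # Toy data for illustration only; these are not the model's real predictions.
    preds = [
        Prediction("Outline", "Outline", 0.95),
        Prediction("Solid", "Solid", 0.80),
        Prediction("Flat", "Solid", 0.55),
        Prediction("Flat", "Flat", 0.45),
        Prediction("Handdrawn", "Outline", 0.30),
    ]
    for t in (0.4, 0.7):
        p, r = precision_recall_at(preds, t)
        print(f"threshold={t}: precision={p:.2f}, recall={r:.2f}")
```

Raising the threshold drops the uncertain (and more often wrong) predictions, so precision goes up while recall goes down, which is the same pattern as in the 0.4 versus 0.7 comparison above.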

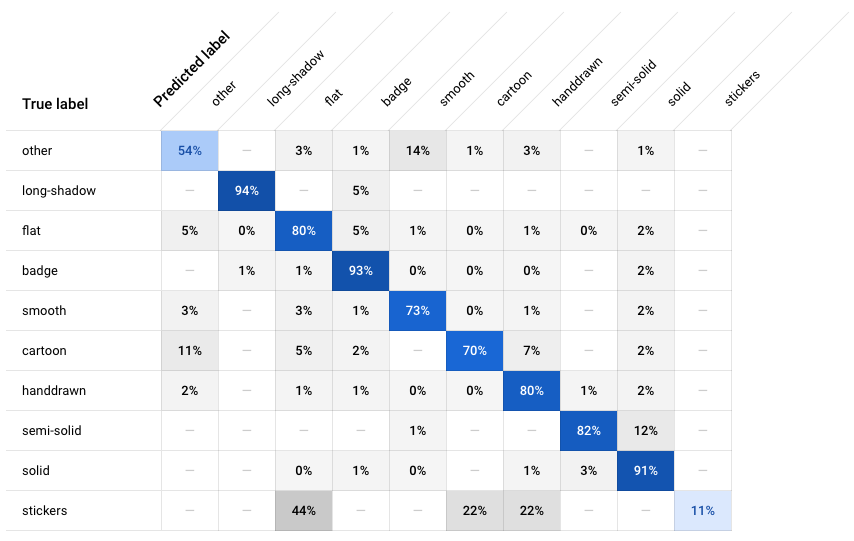

The table below shows how often the model classified each label correctly (in blue), and which labels were most often confused for that label (in gray).
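The same per-label view can be computed from raw (true label, predicted label) pairs. A minimal plain-Python sketch, not Google's implementation: for each true label, it returns the share classified correctly and the labels it was most often confused with. The example pairs are made up:

```python
from collections import Counter, defaultdict
from typing import Iterable


def confusion_summary(
    pairs: Iterable[tuple[str, str]], top_n: int = 2
) -> dict[str, tuple[float, list[tuple[str, int]]]]:
    """For each true label, return (share classified correctly, most common wrong predictions)."""
    by_label: dict[str, Counter] = defaultdict(Counter)
    for true_label, predicted in pairs:
        by_label[true_label][predicted] += 1

    summary = {}
    for label, counts in by_label.items():
        total = sum(counts.values())
        correct_share = counts[label] / total  # Counter returns 0 for missing keys
        confusions = [(other, n) for other, n in counts.most_common() if other != label][:top_n]
        summary[label] = (correct_share, confusions)
    return summary


if __name__ == "__main__":
    pairs = [
        ("Flat", "Flat"),
        ("Flat", "Solid"),
        ("Flat", "Flat"),
        ("Outline", "Outline"),
        ("Outline", "Handdrawn"),
    ]
    for label, (share, confused) in confusion_summary(pairs).items():
        print(label, f"{share:.0%}", confused)
```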

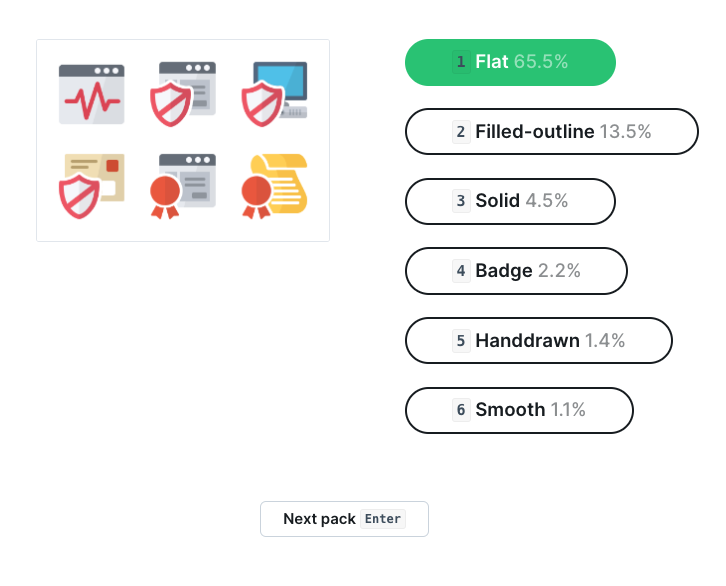

As mentioned in the beginning, we like to do things manually before automating them. So once we had a model whose results were good enough to apply, we decided to build a small UI that would allow us to do some basic quality assurance.

Building a simple UI for quality assurance

Instead of applying the new styles directly to the database, we wrote the predictions to a separate table. We set up a UI where employees could see the predictions, and only after they verified that a prediction was correct was it applied to the icon data.
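A staging table like this can be sketched with SQLite. The table and column names below are hypothetical, and for simplicity each pack gets a single style; the point is that a prediction only reaches the style data after a reviewer approves it:

```python
import sqlite3

# Hypothetical schema: predictions are staged here and only copied to the
# style data once a reviewer has approved them. One style per pack, for simplicity.
SCHEMA = """
CREATE TABLE IF NOT EXISTS style_predictions (
    pack_id    INTEGER PRIMARY KEY,
    style      TEXT NOT NULL,
    confidence REAL NOT NULL,
    status     TEXT NOT NULL DEFAULT 'pending'  -- pending | approved | rejected
);
CREATE TABLE IF NOT EXISTS pack_styles (
    pack_id INTEGER PRIMARY KEY,
    style   TEXT NOT NULL
);
"""


def stage_prediction(db: sqlite3.Connection, pack_id: int, style: str, confidence: float) -> None:
    """Store a model prediction as pending review."""
    db.execute(
        "INSERT OR REPLACE INTO style_predictions (pack_id, style, confidence) VALUES (?, ?, ?)",
        (pack_id, style, confidence),
    )
    db.commit()


def review(db: sqlite3.Connection, pack_id: int, approved: bool) -> None:
    """Record a reviewer's decision; only approved styles are copied to pack_styles."""
    status = "approved" if approved else "rejected"
    db.execute("UPDATE style_predictions SET status = ? WHERE pack_id = ?", (status, pack_id))
    if approved:
        db.execute(
            "INSERT OR REPLACE INTO pack_styles (pack_id, style) "
            "SELECT pack_id, style FROM style_predictions WHERE pack_id = ?",
            (pack_id,),
        )
    db.commit()


if __name__ == "__main__":
    db = sqlite3.connect(":memory:")
    db.executescript(SCHEMA)
    stage_prediction(db, 1, "Outline", 0.92)
    stage_prediction(db, 2, "Flat", 0.41)
    review(db, 1, approved=True)
    review(db, 2, approved=False)
    print(db.execute("SELECT pack_id, style FROM pack_styles").fetchall())
```

Keeping rejected predictions around, instead of deleting them, also leaves a record of where the model was wrong.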

By doing this, we could tell which threshold would be good to set for each style before applying it automatically. For example, all icons with a style predicted with a confidence above 60% would be applied automatically, while anything below that would get a manual check.
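The routing rule can be sketched as a small function. The 0.6 default comes from the example above; the per-style override for "Handdrawn" is a hypothetical placeholder for a value you would tune from the manual review results:

```python
# 0.6 is the example threshold from the text; the per-style override is a
# hypothetical placeholder to be tuned from the manual review results.
DEFAULT_THRESHOLD = 0.6
STYLE_THRESHOLDS = {"Outline": 0.6, "Handdrawn": 0.8}


def route(style: str, confidence: float) -> str:
    """Return 'auto' to apply the predicted style directly, or 'review' for a manual check."""
    threshold = STYLE_THRESHOLDS.get(style, DEFAULT_THRESHOLD)
    return "auto" if confidence > threshold else "review"


if __name__ == "__main__":
    for style, confidence in [("Outline", 0.75), ("Handdrawn", 0.75), ("Flat", 0.55)]:
        print(style, confidence, route(style, confidence))
```

Per-style thresholds let styles that the model confuses more often be held to a stricter bar without slowing down the easy ones.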

Cost considerations

Google Vision's solution was priced by the number of predictions, whereas Vertex AI charged by the number of hours the model was deployed. This was cheaper for us, and the performance was very similar. Making predictions for 3 million icons would cost $4,500 when paying per prediction, compared to $380 with Vertex AI when paying by the hour (and for us, this was even covered by the free trial).
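Whether hourly billing wins depends on how many predictions the deployed model serves per hour. A minimal sketch of the break-even point: the per-prediction price is derived from the $4,500 for 3 million figure, while the hourly rate is an assumption back-calculated from the roughly $1,000 per month for an always-deployed model mentioned below (about 730 hours):

```python
# From the text: about $4,500 for 3 million predictions with per-prediction billing.
PREDICTIONS = 3_000_000
PER_PREDICTION_TOTAL_USD = 4_500

# Assumption: roughly $1.37 per hour, back-calculated from "~$1,000 per month"
# for a model that stays deployed for an average month of about 730 hours.
ASSUMED_USD_PER_HOUR = 1_000 / 730


def break_even_throughput(usd_per_prediction: float, usd_per_hour: float) -> float:
    """Predictions per hour above which hourly billing is cheaper than per-prediction billing."""
    return usd_per_hour / usd_per_prediction


usd_per_prediction = PER_PREDICTION_TOTAL_USD / PREDICTIONS  # $1.50 per 1,000 predictions
print(f"${usd_per_prediction * 1000:.2f} per 1,000 predictions")
print(f"break-even at about {break_even_throughput(usd_per_prediction, ASSUMED_USD_PER_HOUR):.0f} predictions/hour")
```

For a bulk job like classifying a large backlog, the deployed model serves far more predictions per hour than the break-even point, which is why hourly billing came out so much cheaper.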

Unfortunately, even with the cheaper Vertex AI option, the cost of keeping the model deployed all the time was too high for us. A month's worth of new content only takes around 3 hours to categorize.

And since we pay by the hour, it did not make sense to pay for the entire month: it's a difference of roughly $1,000 for a month compared to roughly $5 for 3 hours.
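Because both options are billed at the same hourly rate, the savings factor depends only on the number of hours deployed. A minimal sketch: the 3 hours per month comes from the text, 730 hours is an average month, and the hourly rate in the usage lines is a hypothetical value chosen only to roughly match the figures above:

```python
HOURS_PER_MONTH = 730  # average month: 8,760 hours / 12
BATCH_HOURS_PER_MONTH = 3  # from the text: about 3 hours covers a month of new content


def deployment_cost(usd_per_hour: float, hours: float) -> float:
    """Cost of keeping the model deployed for `hours` at an hourly rate."""
    return usd_per_hour * hours


def savings_factor(batch_hours: float, hours_per_month: float = HOURS_PER_MONTH) -> float:
    """How many times cheaper on-demand deployment is than staying deployed all month.
    The hourly rate cancels out, since both options are billed at the same rate."""
    return hours_per_month / batch_hours


if __name__ == "__main__":
    illustrative_rate = 1.4  # hypothetical USD per hour, not a quoted price
    print(f"always on: ${deployment_cost(illustrative_rate, HOURS_PER_MONTH):,.2f}")
    print(f"on demand: ${deployment_cost(illustrative_rate, BATCH_HOURS_PER_MONTH):,.2f}")
    print(f"about {savings_factor(BATCH_HOURS_PER_MONTH):.0f}x cheaper")
```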

While it's super easy to deploy and undeploy the model on Vertex AI, it means we have to do so manually whenever we want new content categorized. It's not optimal, but it's much cheaper.
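One way to make the manual step safer is to wrap each batch in a context manager that always undeploys, so a crashed job never leaves the model deployed and billed by the hour. This is a minimal sketch with pluggable callables. With the Vertex AI Python SDK (`google-cloud-aiplatform`), `deploy` could wrap `Model.deploy()`, which returns an `Endpoint`, and `undeploy` could call that endpoint's `undeploy_all()`; treat that mapping as an assumption to check against the SDK documentation. The usage below uses stand-in functions and makes no cloud calls:

```python
from contextlib import contextmanager
from typing import Callable, Iterator, TypeVar

Handle = TypeVar("Handle")


@contextmanager
def temporarily_deployed(
    deploy: Callable[[], Handle], undeploy: Callable[[Handle], None]
) -> Iterator[Handle]:
    """Deploy a model, yield the deployment handle, and always undeploy afterwards.

    The `finally` block runs even if the prediction batch raises, so the model is
    never left deployed (and billed by the hour) by accident.
    """
    handle = deploy()
    try:
        yield handle
    finally:
        undeploy(handle)


if __name__ == "__main__":
    # Stand-in callables for illustration; no real cloud calls are made here.
    log: list[str] = []

    def fake_deploy() -> str:
        log.append("deploy")
        return "endpoint-1"

    def fake_undeploy(endpoint: str) -> None:
        log.append(f"undeploy {endpoint}")

    with temporarily_deployed(fake_deploy, fake_undeploy) as endpoint:
        log.append(f"predict via {endpoint}")

    print(log)
```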

Running an exported model

Another option we explored was exporting a trained model from Google Vision and running it on a server on Heroku. Instead of paying per request, we would pay a fixed hourly price for the server. However, we found that the model Google Vision lets you export is a lightweight version with much lower accuracy.

Conclusion

To conclude, training new models with Google Vertex AI is straightforward and fast, and the performance is impressive; matching it with a model we trained ourselves would probably take a lot longer. The price is low as long as we deploy the model manually for a few hours once in a while.

The main drawback is not being able to download the full model. That would enable us to host it ourselves at a very low price and keep it running all the time, avoiding the manual work of deploying and undeploying the model whenever we need it. Setting up the exported model and trying to understand why its results were not as good as advertised was also time-consuming. Google was not very upfront about the lower accuracy of the edge models, and in our experience with image data, the hosted solution was the better option.

By Nikolaj Rathcke